Alert fatigue can quickly get out of hand when you’re managing a highly scaled enterprise edge device fleet. Multiple teams, management tools, and software stacks can generate a tidal wave of alerts in your inbox, making it almost impossible to determine which ones matter when you log on first thing in the morning. In this post, we’ll help you define a strategy and the tactics necessary to combat alert fatigue.

What Is Device Management Alert Fatigue? What Causes It?

You have alert fatigue when the quantity or quality of alerts received results in a substantial number of those alerts being ignored. Put another way: Alert fatigue is the product of a poor ratio of signal (actionable alerts) to noise (non-actionable alerts). That ratio gets out of balance when recoverable states are flagged to IT teams far more often than non-recoverable states. Before we get into a strategy for addressing alert fatigue, let’s define all of these terms.

What’s an actionable alert?

An actionable alert is any alert which results in the recipient of that alert taking a concrete step to investigate or remediate an issue. Some examples of actionable alerts include:

- A device has been offline for >X time

- A device has left a restricted geofence area for >X time

- A device has locked itself to prevent tampering

- A device has a hardware fault (e.g., battery failure, overheat, peripheral failure)

- A device has failed to update content or configuration after >X number of attempts over >Y time

- A device is unable to connect to a peripheral after >X number of attempts

All of the above are scenarios where IT personnel would be required to, at least, begin an investigation of the issue to determine if a formal ticket should be raised. Actionable alerts always demand a human being do something in response to the information in that alert (even if that alert may end up being a “dead end”).
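To make the “>X time” idea concrete, here is a minimal sketch of a duration-threshold check. The function name and the 48-hour default are assumptions for illustration only; the right value of X depends on your fleet.

```python
from datetime import datetime, timedelta

# Hypothetical value for the "X" in "offline for >X time".
OFFLINE_THRESHOLD = timedelta(hours=48)


def is_offline_alert(last_seen: datetime, now: datetime,
                     threshold: timedelta = OFFLINE_THRESHOLD) -> bool:
    """Return True only when the device has been silent for longer than the threshold."""
    return now - last_seen > threshold


now = datetime(2024, 1, 3, 9, 0)
print(is_offline_alert(now - timedelta(hours=49), now))    # silent beyond the threshold
print(is_offline_alert(now - timedelta(minutes=30), now))  # brief blip, no alert
```

The same shape applies to the geofence example: swap the last check-in time for the time the device left the permitted area.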

What are non-actionable alerts?

A non-actionable alert is any alert which does not clearly indicate an ongoing issue requiring intervention or otherwise meet the threshold for formal investigation. Some examples of non-actionable alerts include:

- Chronic spikes in CPU, memory, or disk usage

- Recoverable exceptions: Device reboot, app crash, peripheral disconnect, temporary loss of Wi-Fi, cellular, or power

- Failed supervisor / admin password entry attempts

- Device drifts from known good configuration

- Device security policy or patch out of date

All of these are non-actionable alerts, even if some of them could mean a device is experiencing an issue. The core distinction here is that none of the above alerts are direct evidence of an ongoing problem. Rather, they are circumstantial or transient evidence of a problem.

If a device’s security patch is out of date, is it actually a problem if this is true of every device in your fleet, and you’ve paused the update? If a peripheral disconnects or a device reboots unexpectedly, do you know there was actually a problem? These questions are good jumping off points for the concepts of recoverable and non-recoverable states.

What are recoverable states?

Recoverable states are device exceptions or failure modes that self-correct, or are otherwise transient. That self-correction could occur on the device itself via normal behavior (e.g., rebooting after a kernel panic), through custom configuration (e.g., a script that turns Bluetooth off and back on again when a peripheral disconnects), or via device management (e.g., a failed app install causes your MDM to attempt the install again the next day).

None of the above examples of recoverable states should result in an alert condition, as they are non-actionable. They should be logged and recorded as events so that they are preserved for audits or investigation later (after all, they could be critical evidence of chronic issues), but recoverable states should never be raised as “code red” alerts requiring human intervention.

What are non-recoverable states?

Non-recoverable states are device exceptions or failure modes that do not self-correct and require direct intervention to remediate. Remediation could mean remoting into a device (e.g., to pull a log or enter admin mode), calling a staff member on-site to troubleshoot (e.g., to physically check peripheral connections), or replacing the device and sending it back to the lab for repair or analysis (e.g., for BSOD-like conditions).

Non-recoverable states are always actionable, and should always result in an alert condition being raised. Non-recoverable states almost always mean a device is not functioning in its intended business capacity, and thus presents an ongoing operational risk that must be remedied as soon as possible.
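One way to picture the split between the two states is a small router that logs every event but only raises alerts for non-recoverable ones. This is a sketch, not a reference to any particular tool; the class names and event labels are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class State(Enum):
    RECOVERABLE = "recoverable"          # self-corrects or is transient
    NON_RECOVERABLE = "non_recoverable"  # needs direct intervention


@dataclass
class DeviceEvent:
    device_id: str
    kind: str    # hypothetical labels, e.g. "reboot" or "hardware_fault"
    state: State


@dataclass
class EventRouter:
    event_log: List[DeviceEvent] = field(default_factory=list)  # kept for audits
    alerts: List[DeviceEvent] = field(default_factory=list)     # sent to a human

    def handle(self, event: DeviceEvent) -> None:
        # Every event is recorded, so chronic issues stay discoverable later.
        self.event_log.append(event)
        # Only non-recoverable states are raised as alerts.
        if event.state is State.NON_RECOVERABLE:
            self.alerts.append(event)


router = EventRouter()
router.handle(DeviceEvent("kiosk-01", "reboot", State.RECOVERABLE))
router.handle(DeviceEvent("kiosk-02", "hardware_fault", State.NON_RECOVERABLE))
print(len(router.event_log), [e.device_id for e in router.alerts])
```

Deciding which state an event belongs to is the hard part, and that classification is assumed to happen upstream of this router.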

How to Build an Actionable Alert Framework, And Why You Should

When you receive too many non-actionable alerts, your team will likely start suffering from alert fatigue. Non-actionable alerts take up too much space in the feed, and actionable alerts can go unnoticed or be erroneously “filtered out” by your team. That means devices in active failure modes could persist in those states for hours, days, or weeks until someone in the field flags the problem.

Step one: The actionable alert framework Q&A

Instead of adopting the “alert for everything, then filter and triage” approach, it’s crucial to build alerts on an actionable versus non-actionable framework. Remember these key questions as you define your actionable alert strategy:

- Does this alert indicate a non-recoverable state with a clear investigation and escalation path?

    - Yes: A device has been offline for 48 hours, and is still not reachable (non-recoverable)

    - No: A device went offline for 30 minutes yesterday, but is now “green” (recoverable)

- Is this alert direct evidence of a problem, or is it merely circumstantial evidence?

    - Direct evidence: A device throws an error indicating repeated, ongoing connection failure with a peripheral after a recent update.

    - Circumstantial evidence: A peripheral disconnected from a device three times over the course of one day.

- What proportion of false positives do we think we’ll receive for this alert based on the threshold and parameters defined?

    - High false positive propensity: Send an alert every time an app fails to install on a device.

    - Low false positive propensity: Send an alert if an app fails to install on a device after X number of attempts over Y period of time.

- Are we misusing an alert as a substitute for a fleet health or visibility metric?

    - Metric misuse: Sending an alert every time a device reaches >X% disk usage or has >Y number of network interrupts per day.

    - Proper alerting: Sending an alert when disk-full errors or network interruptions lead to multiple content or configuration update failures.

- Does this alert indicate a true operational risk?

    - Operational risk: A device cannot install a critical content update that could lead to a functional outage if not addressed by [date].

    - Not an operational risk: A device is throwing app crash errors a few times a day, but is otherwise functioning normally.
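The “X attempts over Y period” pattern from the false positive question can be sketched as a rolling window of failures. The class below is a hypothetical illustration, and it assumes failures are recorded in chronological order.

```python
from collections import deque
from datetime import datetime, timedelta


class FailureWindow:
    """Signal an alert only after max_failures failures inside a rolling window."""

    def __init__(self, max_failures: int, window: timedelta):
        self.max_failures = max_failures  # the "X" attempts
        self.window = window              # the "Y" period
        self._failures = deque()

    def record_failure(self, at: datetime) -> bool:
        """Record one failure and return True if the alert threshold is now met."""
        self._failures.append(at)
        # Drop failures that have aged out of the window.
        while self._failures and at - self._failures[0] > self.window:
            self._failures.popleft()
        return len(self._failures) >= self.max_failures


installs = FailureWindow(max_failures=3, window=timedelta(hours=24))
start = datetime(2024, 1, 1, 8, 0)
for hours in (0, 1, 2):
    should_alert = installs.record_failure(start + timedelta(hours=hours))
print(should_alert)  # three failures inside 24 hours
```

A single failed install never alerts under this rule; it only becomes an alert once the failures cluster tightly enough to suggest the device will not recover on its own.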

By aggressively leaning into the above considerations, you can seriously prune the number of alerts your team receives on a daily basis. You may find the “silence” deafening at first, but it’s crucial to remember that alerts are not a substitute for visibility or metrics monitoring. That requires a “fleet-first” management mindset, which we’ll get into next.

Step two: Manage your entire device fleet, not individual devices

One of the most challenging cultural shifts for IT teams is adopting a “fleet-first” device management mindset. In truth, this is easier said than done: tech stacks requiring multiple management frontends (device management tool sprawl), multiple operating systems, and multiple vendor software stacks and custom integrations make deep fleet fragmentation a growing reality in the enterprise. When you don’t have any top-down visibility into your fleet’s core metrics (device health, uptime, usage, etc.), alerts can quickly become a crutch to try and normalize reporting across seemingly irreconcilable systems.

This is a very big challenge to confront on its own. There’s no quick or easy answer, but it’s important to commit to that cultural shift (manage fleets, not devices) as your fleet continues to evolve and scale. If you accept tool sprawl and multiple OSes as fragmentation multipliers you cannot mitigate, that prophecy becomes self-fulfilling.

With a fleet-first approach to device management, your visibility grows immensely, and your alerting strategy becomes far more flexible (and scalable). With this approach, you can:

- Create alert conditions that must be satisfied across well-defined groups of devices (OS, form factor, location), identifying patterns of behavior, not just one-offs.

- Define bespoke alert frameworks for events like software updates, where exception thresholds can be used to automatically widen or pause rollouts.

- Prioritize alerts based on key business functions, and retire metrics-as-alerts reporting strategies.

- Develop fleet-scale automated remediations, turning previously non-recoverable events requiring manual intervention into recoverable ones that don’t even need to alert.
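As a rough sketch of group-level alerting, the snippet below computes an update failure rate per cohort and flags only cohorts above a threshold, then uses the same rate to decide whether a staged rollout should widen or pause. The record shape, cohort labels, and threshold values are all assumptions for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, NamedTuple


class DeviceStatus(NamedTuple):
    device_id: str
    cohort: str          # hypothetical label, e.g. "android-kiosk-east"
    update_failed: bool


def cohorts_over_threshold(statuses: Iterable[DeviceStatus],
                           max_failure_rate: float = 0.10,
                           min_cohort_size: int = 5) -> Dict[str, float]:
    """Return {cohort: failure_rate} for cohorts whose rate exceeds the threshold."""
    totals: Counter = Counter()
    failures: Counter = Counter()
    for status in statuses:
        totals[status.cohort] += 1
        if status.update_failed:
            failures[status.cohort] += 1
    flagged = {}
    for cohort, total in totals.items():
        if total < min_cohort_size:
            continue  # too few devices to call it a pattern
        rate = failures[cohort] / total
        if rate > max_failure_rate:
            flagged[cohort] = rate
    return flagged


def rollout_action(failure_rate: float, pause_above: float = 0.05) -> str:
    """Pause a staged rollout when the exception threshold is exceeded, else widen it."""
    return "pause" if failure_rate > pause_above else "widen"
```

Here a single failed device in a large cohort produces no alert at all, while a cluster of failures in one cohort produces exactly one.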

By moving to a fleet-first management mindset, you can seriously cut down on non-actionable alerts. And, more importantly, you can start to build an alerting strategy that draws on the visibility of your full fleet, leading to more data-driven decision making and fewer fire drills. And that’s when you’re ready to start building a proactive device health strategy.

Step three: Build a proactive device health strategy

When you achieve genuine fleet-level visibility, it’s easy to start cutting down on superfluous alerting — especially the alerts that just serve as stand-ins for device metrics reporting. When you’ve reached this level of management maturity, the next step is preventing genuine alert conditions before they fire. That means using those device health signals in more meaningful, data-driven ways.

With truly unified fleet management, you have far more common denominators to draw on when assessing the health of your devices. It becomes easier to start aggregating those health factors and piping that data into custom workflows, not just for analysis, but for creating a dedicated device health alerting regime that flags potential trouble spots before they become operational risks.

- Identify pre-failure signals: Increased boot time, app launch latency, and reboot frequency can be recorded as signals, trendlined against targets, and reported to development teams or vendors.

- Manage by device cohort: Create like-device (OS, SKU, vendor) groupings to more narrowly track core vitals and avoid fleet-scale data distortions.

- Automate drift control: Build Blueprints for your configurations and apply top-down desired state management to catch device drift the moment it happens, instead of making it a periodic cleanup task.

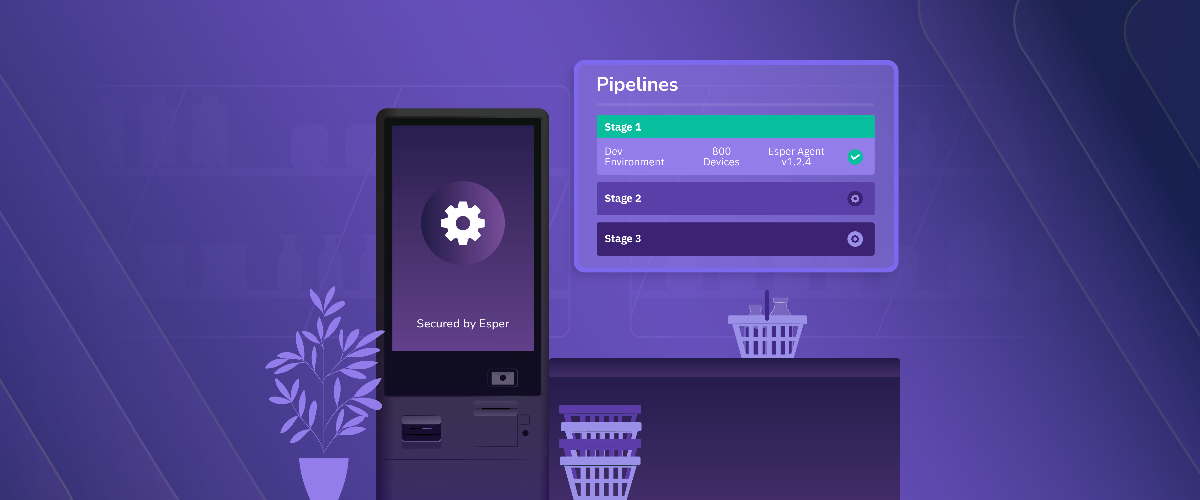

- Test and stage rollouts: Isolate your device lab, build test groups, and deploy in stages using Pipelines.

- Rollback and self-healing: Identify and automate checks for operational failure modes after updates or configuration changes. If a device fails, automatically roll it back to the last known good state.
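For the pre-failure signals item, one simple way to trendline a metric against a target is a least-squares slope over recent samples, projected a few samples ahead. This is only a sketch: the function names, the sample data, and the choice of a linear trend are assumptions, and real signals may need smoothing or seasonality handling.

```python
from statistics import mean
from typing import Sequence


def trend_slope(values: Sequence[float]) -> float:
    """Least-squares slope of the values against their sample index."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    xs = range(n)
    x_bar = mean(xs)
    y_bar = mean(values)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    return numerator / denominator


def will_breach_target(values: Sequence[float], target: float, horizon: int) -> bool:
    """Project the latest value forward by `horizon` samples along the trend."""
    projected = values[-1] + trend_slope(values) * horizon
    return projected > target


# Hypothetical daily boot times in seconds for one device cohort.
boot_times = [20.0, 21.0, 22.0, 23.0]
print(will_breach_target(boot_times, target=30.0, horizon=10))
```

A projected breach is a health signal to review or report, not an alert in the sense used earlier in this post, since nothing has failed yet.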

These are really the “end game” — sophisticated and highly automated processes that allow teams to sustainably scale fleets while maintaining visibility and minimizing alert fatigue. When you start treating your fleet as a dynamic and modular entity (instead of a list of green-light / red-light endpoints), you start opening new doors. You improve operational resilience, you increase agility, and you give teams the bandwidth to innovate.
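The drift control and rollback items in the list above can be illustrated with a small desired-state sketch. It is not tied to any product feature; the function names and configuration keys are hypothetical, and the health check is a stand-in for whatever post-change validation you run.

```python
from typing import Any, Callable, Dict, Tuple

Config = Dict[str, Any]


def config_drift(desired: Config, actual: Config) -> Dict[str, Tuple[Any, Any]]:
    """Return {key: (desired_value, actual_value)} for every managed key that differs."""
    drift = {}
    for key, want in desired.items():
        have = actual.get(key)
        if have != want:
            drift[key] = (want, have)
    return drift


def reconcile(desired: Config, actual: Config,
              health_check: Callable[[Config], bool]) -> Tuple[Config, str]:
    """Apply the desired state, keeping the last known good config if the check fails."""
    last_known_good = dict(actual)
    candidate = {**actual, **desired}
    if health_check(candidate):
        return candidate, "applied"
    return last_known_good, "rolled_back"


desired = {"wifi_ssid": "corp", "kiosk_mode": True}
actual = {"wifi_ssid": "guest", "kiosk_mode": True}
print(config_drift(desired, actual))
```

When both steps succeed automatically, the drift is corrected and recorded as an event without anyone being paged.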

It’s not a short or easy journey to get here, but once you arrive, it’s going to be obvious it was well worth the effort.

Keep Business Operations as Your North Star

When you’re managing a highly heterogeneous and complex enterprise device fleet, ticket triage can easily become your entire working model. The spigot is always on full blast, and a “better safe than sorry” culture means IT teams end up with far more information than they can possibly hope to ingest. It’s exhausting, and restricting the flow of alerts can understandably provoke anxiety.

As we’ve explored above, it’s critical to push past that anxiety. When you sit down and really ask “Does getting this alert actually help us?”, you can start shifting attitudes. By narrowing the alert focus to the actionable (those non-recoverable states), you’ll provoke conversations about what purposes the current alerting regime serves, and where your visibility and automation gaps lie. It doesn’t mean those gaps will go away overnight simply for being exposed, but it does highlight the need for almost all scaled organizations to shift to more modern modes of device management.
